Armed robots, autonomous weapons and ethical issues

Pere Brunet – Article published after the “Future Wars” Conference, CND, London – 10th November 2018

The business of war and the arms trade are highly profitable for the military industry and for financial entities. Moreover, the strategy of the global ruling elite is based on a militarized conception of national security that ends up favouring its businesses and benefiting its large corporations, while boosting climate change, depleting the resources of the global south, generating conflicts and impoverishing the vast majority of the world's population. We are in fact living in an undemocratic system, controlled by about 800 people (Brunet 2018), that uses militarized security schemes to obtain resources from the most impoverished countries and secure their supply in order to maintain the standard of living of the rich countries, while closing and protecting borders against millions of people displaced by armed conflicts, desertification and global warming.

Wars are big businesses that escape democratic control. They enrich a few while destroying the lives of many others, who have the same right to life as the few. In this context, ethics requires placing people and their rights at the centre of politics. This is more important now than ever before, as we are witnessing the development not only of drones that can fly themselves, staying aloft for extended periods, but also of drones and armed robots that may be able to select, identify and destroy targets without human intervention (Burt 2018). Although today's armed drones cannot be considered fully autonomous weapons, the increasing use of remotely controlled armed drones can in many ways be seen as a halfway point towards truly autonomous weapon systems (Burt 2018). We therefore claim that a public debate should be held on the ethics and future use of artificial intelligence and autonomous technologies, particularly their military applications.

Robotic military systems: Algorithms, automation, deep learning and autonomy

Robots are programmable or self-controlling machines that can perform complex tasks automatically, usually using sensors to analyse their environment. When such robots are used for military purposes, we speak of robotic military systems. Fully autonomous lethal weapon systems are known as LAWS (lethal autonomous weapon systems).

Many robotic military systems are now automated in some sense. Automation algorithms can be found in their geolocation and driving systems, in the control of their sensors, actuators and weapons, and in their health management, but also in targeting, decision-making and the execution of attacks.

Algorithms, in turn, can be reliable, heuristic or massively heuristic, and we find examples of all three in daily life. Systems using reliable algorithms include everyday GPS navigation and, in the military, weapons such as laser-guided bombs (with precisely controlled guidance systems). They have no parameters to tune, and their potential errors come not from the algorithms themselves but mainly from misuse and human mistakes. Systems based on heuristic algorithms have a number of parameters to tune, and their suitability for a particular application depends on the skill of the person who tuned them. They include many of the existing drones and automated weapons with targeting capabilities. Their parameter settings can never be optimal, so they are always prone to errors, which must be detected and corrected by human operators.
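As a minimal sketch of why tuning never makes a heuristic system error-free, consider a toy detector whose single tunable parameter is a decision threshold. The scores and labels below are invented for illustration and do not describe any real system; the point is only that, when the score ranges of the two classes overlap, every threshold trades false alarms against misses and none removes both.

```python
# Toy heuristic detector: an item is flagged when its score reaches a
# tunable threshold. Scores and labels are invented for illustration.
samples = [
    (0.10, False), (0.25, False), (0.40, False), (0.55, False), (0.70, False),
    (0.30, True), (0.50, True), (0.65, True), (0.80, True), (0.95, True),
]

def count_errors(threshold):
    """Return (false_alarms, misses) for a given threshold."""
    false_alarms = sum(1 for score, positive in samples
                       if score >= threshold and not positive)
    misses = sum(1 for score, positive in samples
                 if score < threshold and positive)
    return false_alarms, misses

for t in (0.20, 0.45, 0.60, 0.90):
    fa, miss = count_errors(t)
    print(f"threshold={t:.2f}  false alarms={fa}  misses={miss}")

# Try every observed score as a candidate threshold (plus one above all
# scores): even the best setting still leaves some errors.
candidates = [score for score, _ in samples] + [1.0]
best_total = min(sum(count_errors(t)) for t in candidates)
print("fewest errors over candidate thresholds:", best_total)
```

Lowering the threshold removes misses but adds false alarms, and vice versa; this is why such systems need human operators to catch the remaining errors.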

However, the problem with autonomous, machine-learning-based systems (LAWS, but also a number of non-military systems) is much more complex. These systems rely on obscure, massively heuristic algorithms with a number of parameters that can reach hundreds of millions. They use greedy machine learning (ML) algorithms, which demand huge training data sets and are also opaque (Potin 2018). Moreover, as discussed next, it is well known that they have a significant, guaranteed probability of failure.

The structure and limits of deep learning

Over recent decades, artificial intelligence (AI) has largely materialized as novel machine learning (ML) algorithms, which have taken off during the last decade. Learning algorithms fall into five main categories: genetic evolutionary algorithms, analogy-based algorithms, symbolic learning systems, Bayesian learning machines and deep learning algorithms (Domingos 2018). In this section we focus on the last of these, since they are used in the design of most autonomous systems, including LAWS.

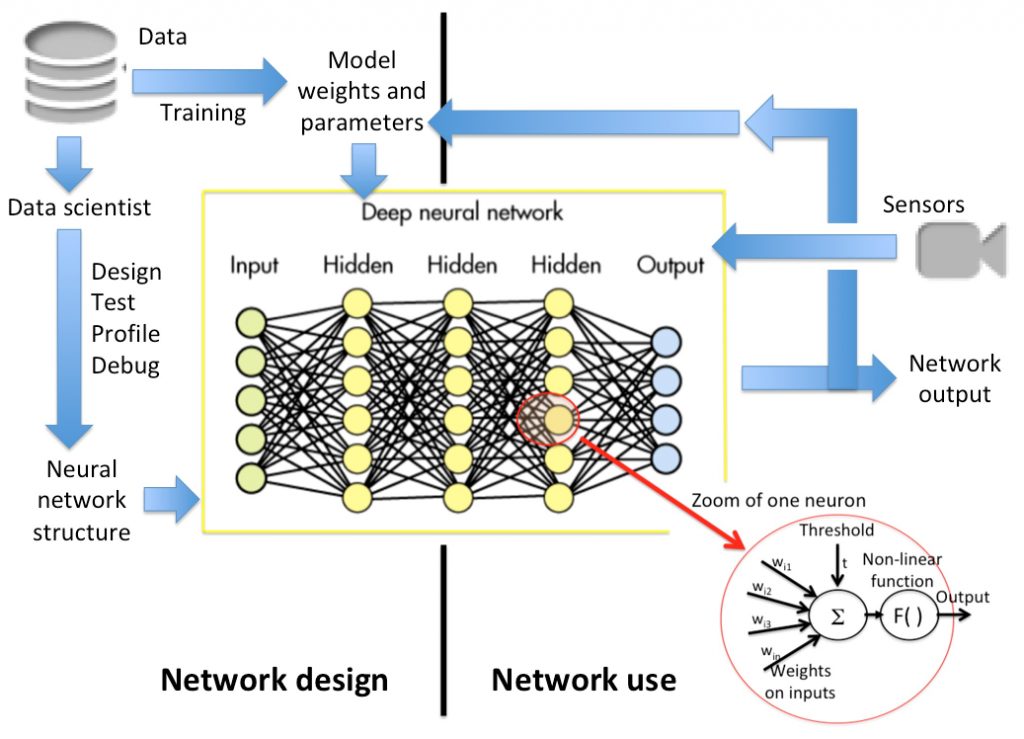

Deep learning (DL) systems work in two steps, training and use. Figure 1 shows a typical DL structure, with the design and training step on the left and the use of the trained network on the right.

Figure 1. Deep learning networks (Figures can be downloaded from: http://www.cs.upc.edu/~pere/Armed_robots_Figures.pdf)

As Jason Potin (Potin 2018) explains, machine learning is just mathematics (linear plus non-linear operations), and ML algorithms are statistical. Such networks possess inputs and outputs, like the neurons in our own brains; they are called “deep” when they include multiple hidden layers containing a huge number of nodes (artificial neurons) and a multitude of connections. In other words, the difference between ML networks and DL networks is simply the number of hidden neuron layers (yellow neurons in Figure 1).

ML (or DL) networks must first be designed, as shown on the left part of Figure 1. Data scientists analyse the data and decide on the most appropriate network structure. Then, huge amounts of existing data are processed to train the model by optimizing its weights, or parameters. This is a time-consuming process. If the network does not reach its expected performance when tested and debugged, it must be refined or even redesigned. A network model therefore has two parts: its structure and an optimized parameter setting.

Deep learning algorithms use multiple layers to build their computational models. According to Samira Pouyanfar et al. (Pouyanfar, 2018), deep learning networks can have different structures, including Recursive Neural Networks, Recurrent Neural Networks, Convolutional Neural Networks (CNN), Generative Adversarial Networks (GAN) and Variational Autoencoders (VAE), among others. In recent years, generative models such as GANs and VAEs have become dominant techniques for unsupervised deep learning. A GAN, for instance, consists of a generative model G and a discriminative model D. On every iteration of the GAN learning process, the generator and the discriminator compete with each other: the generator tries to produce more realistic outputs to fool the discriminator, while the discriminator tries to tell real data apart from the fake data produced by the generator. It is like a game of cat and mouse (Pouyanfar, 2018). In all cases, learning works by optimizing the weights (parameters) associated with all neuron connections (Figure 1).
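To make “training by optimizing the weights” concrete, here is a minimal sketch for the smallest possible network: a single sigmoid neuron trained by stochastic gradient descent on a cross-entropy loss. The training data, learning rate and number of epochs are invented assumptions; real DL training propagates gradients through many layers (backpropagation) and uses vastly larger data sets, but the principle of repeatedly nudging weights to reduce the error on known examples is the same.

```python
import math
import random

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_neuron(data, epochs=1000, lr=0.5, seed=0):
    """Fit the weights w and bias b of one sigmoid neuron by stochastic
    gradient descent on the cross-entropy loss.
    data: list of (features, label) pairs with label 0 or 1."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in data[0][0]]   # small random start
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            # for sigmoid + cross-entropy, dLoss/dz is simply (p - y)
            err = predict_proba(w, b, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

# Invented, linearly separable training set: label 1 when x0 + x1 > 1
train = [([0.0, 0.0], 0), ([0.3, 0.2], 0), ([0.1, 0.5], 0),
         ([1.0, 1.0], 1), ([0.9, 0.6], 1), ([0.5, 1.0], 1)]

w, b = train_neuron(train)
accuracy = sum((predict_proba(w, b, x) >= 0.5) == bool(y)
               for x, y in train) / len(train)
print("learned weights:", w, "bias:", b)
print("training accuracy:", accuracy)
```

Note that the learned numbers carry no human-readable meaning; with millions of them, this is the root of the opacity discussed below.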

After the training phase, the network is ready to be used. Sensor (and other) information is supplied to the input layer of neurons. Then every neuron in the network computes a weighted sum of all the signals coming from neurons in the previous layer, applies a non-linear operation (a threshold or activation function) and sends its output to the neurons in the next layer (Figure 1, right). Non-linear activation functions are essential to ensure that each neuron contributes to the final result in a differentiated way; otherwise, the whole DL network would be a huge linear system that could be collapsed into a single matrix multiplication. The final network output is often used to further tune the network parameters, in a sort of dynamic feedback training.
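The collapse argument can be checked numerically. The sketch below uses a tiny 2-3-2 network with hypothetical weights (biases omitted for brevity): without an activation function, applying two layers gives exactly the same result as multiplying once by the product matrix W2·W1; inserting a ReLU (one common choice of non-linear activation, assumed here) after the hidden layer breaks that equivalence.

```python
def matvec(M, v):
    # each output neuron: weighted sum of its inputs
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(A, B):
    # (A @ B)[i][j] = sum_k A[i][k] * B[k][j]
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def relu(v):
    return [max(0.0, x) for x in v]

# Hypothetical weights for a tiny 2-3-2 network
W1 = [[1.0, -2.0], [0.5, 1.0], [-1.0, 1.0]]   # 3x2: input -> hidden layer
W2 = [[1.0, 1.0, -1.0], [2.0, -1.0, 0.5]]     # 2x3: hidden -> output layer
x = [1.0, 2.0]

# Two purely linear layers ...
two_linear_layers = matvec(W2, matvec(W1, x))
# ... equal a single matrix multiplication by W2 @ W1
single_matrix = matvec(matmul(W2, W1), x)
# With a non-linear activation in between, the shortcut no longer holds
with_relu = matvec(W2, relu(matvec(W1, x)))

print(two_linear_layers, single_matrix, with_relu)
```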

Whereas the initial network training can be very time-consuming and requires huge amounts of data (DL networks can have hundreds of millions of neurons and parameters, requiring training data volumes of the same order of magnitude), using a trained DL network is very fast and efficient, because the mathematical operations performed at neuron level are extremely simple.
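A rough count shows both sides of this contrast. The sketch below counts trainable parameters and multiply-add operations for a fully connected network; the layer sizes (a flattened 224×224 RGB image, two hidden layers of 1,000 neurons, 10 outputs) are hypothetical, and convolutional networks share weights, so their counts differ. Even so, a single forward pass amounts to roughly one multiply-add per connection, which modern hardware performs very quickly.

```python
def dense_network_cost(layer_sizes):
    """Count trainable parameters and multiply-add operations for one
    forward pass of a fully connected network with the given layer sizes."""
    params = 0
    mult_adds = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        params += n_in * n_out + n_out   # weights plus one bias per neuron
        mult_adds += n_in * n_out        # one multiply-add per connection
    return params, mult_adds

# Hypothetical sizes: flattened 224x224 RGB image, two hidden layers, 10 outputs
sizes = [224 * 224 * 3, 1000, 1000, 10]
p, m = dense_network_cost(sizes)
print(f"{p:,} parameters, {m:,} multiply-adds per input")
```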

Let us compare the DL network of an automatic translation (AT) system with the DL targeting system of an autonomous weapon (AW). In the AT application, training data comes from large numbers of text pairs, each text being the translation of the other. When the network is used, the input is (a parameterization of) our original sentence, and the output is its translation. In AWs, training data may come from intelligence agencies and many other sources that identify potential targets. When the AW network is used, the input comes from the weapon's sensors (cameras, etc.) and the output could be, for each element of the camera image, its probability of being a “valid” target.

However, a number of authors, such as Anh Nguyen (Nguyen, 2015), have shown striking differences between human vision and current deep neural networks, raising questions about the generality of recognition algorithms based on deep learning. As Nguyen et al. recall, changing an image (e.g. of a lion) in a way imperceptible to humans can cause a deep neural network to label it as something else entirely, such as a library. They also show that it is easy to produce images that are completely unrecognizable to humans but that state-of-the-art deep learning algorithms classify as recognizable objects with 99.99% confidence. These are the kinds of errors that targeting systems in autonomous weapons can make because of inherent DL network errors. Such systems can give a “good answer”, but they can also give completely nonsensical answers, as AT systems sometimes do.
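Why can tiny changes matter so much? Nguyen et al. generated their fooling images with evolutionary algorithms and gradient ascent; the sketch below instead uses a different, simpler technique, the fast gradient sign idea applied to a purely linear classifier. All weights and inputs are hypothetical. Each input value moves by at most 0.01 (about 2.5 of 255 grey levels), yet because the model sums over 150,528 inputs, the small changes add up and the classifier swings from favouring one class to being almost certain of the other.

```python
import math

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def score(w, b, x):
    return b + sum(wi * xi for wi, xi in zip(w, x))

n = 224 * 224 * 3                 # input size of a hypothetical colour image
w = [0.01 if i % 2 == 0 else -0.01 for i in range(n)]   # hypothetical weights
b = -2.0                          # hypothetical bias
x = [0.5] * n                     # hypothetical input, pixel values in [0, 1]

eps = 0.01                        # maximum change allowed per pixel
# For a linear model the gradient of the score w.r.t. x is w, so moving each
# pixel by eps * sign(w_i) raises the score by eps * sum(|w_i|).
x_adv = [xi + eps * math.copysign(1.0, wi) for xi, wi in zip(x, w)]

p_orig = sigmoid(score(w, b, x))
p_adv = sigmoid(score(w, b, x_adv))
max_change = max(abs(a - c) for a, c in zip(x_adv, x))
print(f"P(class 1) before: {p_orig:.4f}, after: {p_adv:.6f}, "
      f"max pixel change: {max_change:.3f}")
```

The reported “confidence” is only a function of the score, not evidence that the answer is right, which is why near-certain outputs can still be nonsensical.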

In short, deep learning (DL) algorithms suffer from limited reliability and a guaranteed, non-negligible probability of failure. They are massively heuristic, their success relying on a multitude of non-linearities (one per neuron) that merely prevent them from collapsing into a single linear matrix. They are also opaque: unlike traditional programs, with formal, debuggable code, the parameters of neural networks are beyond explanation. DL networks are black boxes whose decision processes are not well understood and whose outputs cannot be explained, raising doubts about their reliability and biases. Moreover, they require huge amounts of training data and are data-sensitive, suffering from cultural data biases: for instance, training AWs to distinguish between suspect individuals and ordinary individuals is not transferable from one culture to another. In Potin's words, deep learning algorithms are greedy, brittle, opaque, and shallow (Potin 2018).

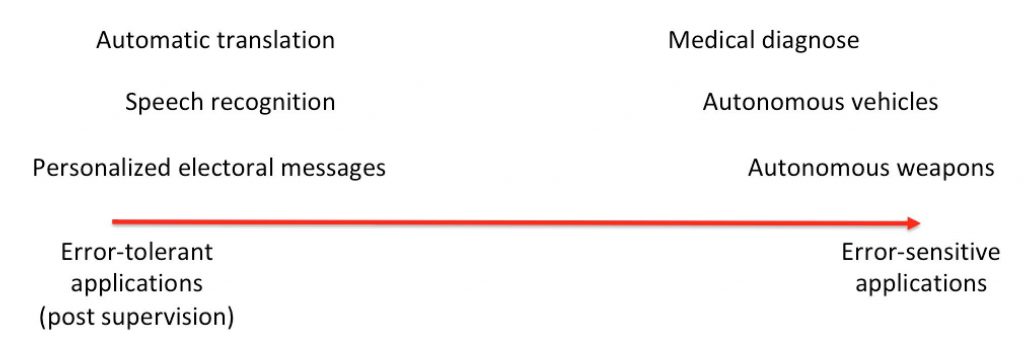

Figure 2. Machine Learning implications in error-tolerant and error-sensitive applications (Figures can be downloaded from: http://www.cs.upc.edu/~pere/Armed_robots_Figures.pdf)

The fact that deep learning (DL) algorithms have a guaranteed, non-negligible probability of failure makes them quite useful in some applications but dangerous in others. Machine learning (ML) systems are useful in error-tolerant applications like automatic translation, provided that their output is post-supervised by humans (see the diagram in Figure 2). They can also be successful in ethically dubious cases like delivering personalized electoral messages before elections, because the number of votes gained will exceed the number of failures. However, they are problematic in error-sensitive applications like medical diagnosis, autonomous vehicles and weapons, because of the lack of accountability and because errors can translate into human victims.
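A short calculation illustrates why a “small” error rate is not small once a system makes many decisions. The error rates and decision counts below are hypothetical, and the sketch assumes that each decision fails independently with the same probability, a simplification of real systems.

```python
def expected_failures(error_rate, decisions):
    """Average number of wrong outputs over a number of decisions."""
    return error_rate * decisions

def prob_at_least_one_failure(error_rate, decisions):
    """Chance of one or more failures, assuming independent decisions that
    each fail with the same probability."""
    return 1.0 - (1.0 - error_rate) ** decisions

for rate in (0.01, 0.001):            # hypothetical per-decision error rates
    for n in (100, 10_000):           # hypothetical numbers of decisions
        print(f"error rate {rate:.1%}, {n:>6} decisions: "
              f"{expected_failures(rate, n):8.1f} expected failures, "
              f"P(at least one) = {prob_at_least_one_failure(rate, n):.3f}")
```

In a translation tool, each of these failures is a wrong sentence that a human reviewer can fix; in an error-sensitive application, each one may be a victim.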

Degree of autonomy in robotic weapons: Ethical issues

Insofar as artificial intelligence (AI) is fuelled by data, AI-based weapons inherit some of their ethical challenges from the debate on data governance, especially regarding consent, ownership and privacy. Also, like other AI-based systems, AI-based weapons have limited reliability and are data-sensitive. Moreover, humans should be able to understand, and explain to third parties, the whole decision process hidden in learning-based deep neural networks.

Figure 3. Degree of autonomy and degree of human intervention (Figures can be downloaded from: http://www.cs.upc.edu/~pere/Armed_robots_Figures.pdf)

Generally speaking, a robot is a tool and, as such, it is never legally responsible for anything. Procedures for attributing responsibility for robots should be established, so that it is always possible to determine who is legally responsible for their actions (Boden et al. 2017). Moreover, the implications of the transformations concealed behind apparently neutral robot technology must be investigated, treating nature and human dignity as insurmountable limits (Palmerini et al. 2016). To ensure accountability, some authors (Winfield 2017) propose that robots and autonomous systems be equipped with an “Ethical Black Box”, the equivalent of a flight data recorder, which continuously records sensor data and relevant internal status data. The ethical black box would be critical to discovering why and how a robot caused victims, and therefore an essential part of establishing accountability and responsibility.
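The core data structure of such a recorder can be sketched as a bounded ring buffer of timestamped records. The class and field names below (`EthicalBlackBox`, `Record`, `sensors`, `internal_state`) are hypothetical and are not taken from Winfield and Jirotka's specification; a real device would also need tamper-proof, crash-survivable storage, which this in-memory sketch does not attempt.

```python
import time
from collections import deque
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Record:
    timestamp: float
    sensors: dict          # hypothetical raw sensor readings
    internal_state: dict   # hypothetical decision-relevant internal data

class EthicalBlackBox:
    """Sketch of a recorder in the spirit of an Ethical Black Box: like a
    flight data recorder, it keeps the most recent `capacity` records and
    overwrites the oldest ones."""

    def __init__(self, capacity: int):
        self._buffer = deque(maxlen=capacity)   # oldest records drop off automatically

    def record(self, sensors: dict, internal_state: dict,
               timestamp: Optional[float] = None) -> None:
        ts = time.time() if timestamp is None else timestamp
        # copy the dicts so later changes by the caller do not alter the log
        self._buffer.append(Record(ts, dict(sensors), dict(internal_state)))

    def dump(self) -> list:
        """Return the stored records, oldest first, e.g. for investigators."""
        return list(self._buffer)

# Usage with invented data and a capacity of three records
ebb = EthicalBlackBox(capacity=3)
for t in range(5):
    ebb.record({"frame_id": t}, {"mode": "idle"}, timestamp=float(t))
print([r.timestamp for r in ebb.dump()])   # [2.0, 3.0, 4.0]
```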

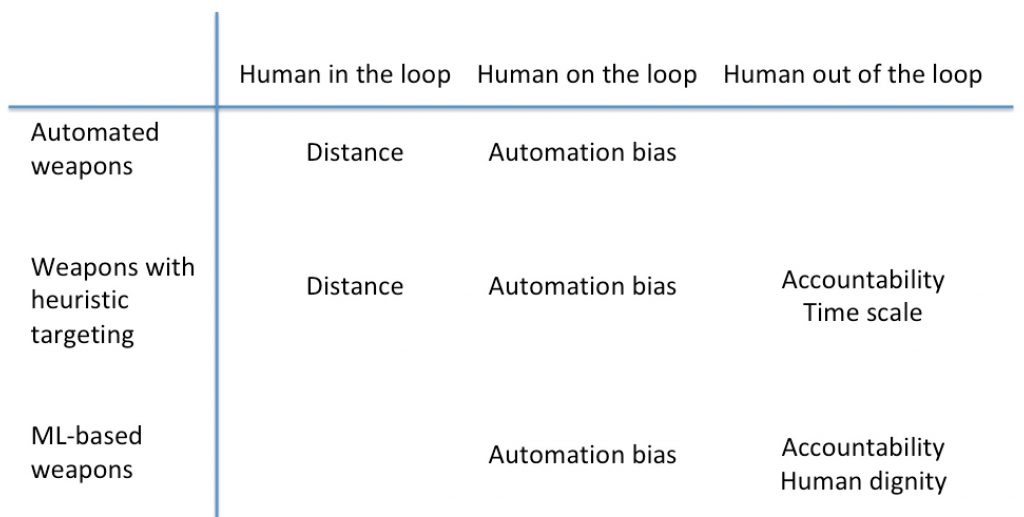

Boulanin and Verbruggen (Boulanin, 2017) propose a classification of weapons based on the degree of human intervention: robotic weapons can be classified into those requiring a human person in the decision circuit (“human in the loop”), those including some mechanism of human supervision (“human on the loop”) and those that, being autonomous, do not require any human intervention (“human out of the loop”), as is the case of LAWS. Crossing these three categories with the three degrees of autonomy discussed in the previous section (automated weapons, weapons with heuristic targeting and autonomous weapons) yields seven possible weapon types, as shown in Figure 3 (the automated/out-of-the-loop and autonomous/in-the-loop combinations do not exist).
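The count of seven follows from crossing the two three-level scales (nine combinations) and removing the two that do not exist. A short enumeration, using labels paraphrased from the text, makes this explicit.

```python
from itertools import product

autonomy = ["automated", "heuristic targeting", "autonomous"]
human_role = ["in the loop", "on the loop", "out of the loop"]

# Combinations that, according to the classification above, do not exist
excluded = {("automated", "out of the loop"), ("autonomous", "in the loop")}

weapon_types = [c for c in product(autonomy, human_role) if c not in excluded]
print(len(weapon_types))   # 7
for a, h in weapon_types:
    print(f"{a}, human {h}")
```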

Robotic weapons that require a human person in the decision circuit are the most widespread. They include remotely controlled systems and drones such as the Terminator of Lockheed Martin (USA), the SkyStriker of Elbit Systems (Israel), the Warmate of WB Electronics (Poland), the XQ-06 Fi of Karal Defense (Turkey), the CH-901 from China and many others. Some of them are already used for surveillance, control and attack tasks against people at borders, such as the Super aEgis II in the demilitarized zone between the two Koreas, and their use for the surveillance of walls and borders is increasingly widespread.

There is currently an important ethical debate (Sharkey 2009) on the use of such systems that require a human person in the decision circuit. While some authors defend their use, authors like Medea Benjamin (Benjamin 2013) argue that when military operations are carried out through the filter of a far-off video camera, visual contact with the enemy disappears, and with it the perceived human cost of a possible attack decreases. Markus Wagner (Wagner 2014) explains that disconnection and distance create an environment in which it is easier to commit atrocities. And, as Philip Alston indicates, outside the context of armed conflict the use of drones for targeted killing will almost never be legal (Alston 2010). Alex Leveringhaus (Leveringhaus 2017) adds that the intentional or unintentional use of distance to obscure responsibility in situations of armed conflict indicates a profound lack of respect for the rights of persons and, by extension, for the moral dignity of individuals, since we all deserve equal consideration and respect. This applies both to automated systems like laser-guided bombs and to weapons with heuristic targeting systems under human control.

Regarding “human on the loop” weapons like Samsung’s SGR-A1, Noel Sharkey (Sharkey 2014) points to automation bias, which undermines their ethical justification. According to Sharkey, “Operators are prepared to accept the computer recommendations without seeking any disconfirming evidence. The time pressure will result in operators falling foul of all downsides of automatic reasoning: neglects ambiguity and suppresses doubt, infers and invents causes and intentions, is biased to believe and confirm, focuses on existing evidence and ignores absent evidence”. Even if human operators can push the red button to stop the robotic weapon, automation bias will influence them to some degree, inducing them to follow the actions proposed by the robotic system, with fatal consequences for the targeted individuals.

According to George Woodhams (Woodhams, 2018), human error, together with reliability and safety issues, will continue to cause armed UAVs to be used in ways unintended by their operators. Conversely, it is not inconceivable that operators will use armed UAVs in potentially escalatory ways while claiming error or malfunction as cover for their actions. This possibility should also be taken into account in any related ethical analysis.

Completely autonomous weapons with humans out of the loop are still under development. At present, human control is maintained over the use of force, so today’s armed drones do not qualify as fully autonomous weapons (Burt 2018). Ethical concerns are, however, much stronger in this case, with a high degree of consensus. As debates continue on the ethical and legal implications of increasingly autonomous weapon systems, future LAW systems will have even greater implications for conflict escalation and inter-State conflict than current-generation armed UAVs (Woodhams, 2018). There is general agreement that there is a ‘red line’ beyond which increasing autonomy in weapon systems is no longer acceptable (Kayser, 2018). The question is whether systems capable of making autonomous targeting decisions can comply with the laws of war. According to Peter Burt (Burt 2018), it is clear that the development and deployment of lethal autonomous drones would give rise to a number of grave risks, primarily the loss of humanity and compassion on the battlefield: “Letting machines ‘off the leash’ and giving them the ability to take life crosses a key ethical and legal Rubicon. Autonomous lethal drones would simply lack human judgment and other qualities that are necessary to make complex ethical choices on a dynamic battlefield, to distinguish adequately between soldiers and civilians, and to evaluate the proportionality of an attack. Other risks from the deployment of autonomous weapons include unpredictable behaviour, loss of control, ‘normal’ accidents, and misuse.” A growing community of scientists who oppose autonomous weapon systems and refuse to collaborate in their development confirms this. This is essential, as criticism and objection should become driving forces of a new ethics-based framework.
In 2018, 2,400 researchers in 36 countries joined 160 organizations in calling for a global ban on lethal autonomous weapons. They argued that such systems pose a grave threat to humanity and have no place in the world (see McFarland 2018 and Sample 2018). The signatories pledged to “neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons.” Some company leaders (Gariepy, 2017) are also refusing to participate in their development. Moreover, international campaigns like Stop Killer Robots are calling for a global ban on completely autonomous weapons (LAWS), which leave humans out of the loop.

As stated by Tony Jenkins, Kent Shifferd and others in the 2018-2019 report of World Beyond War (Jenkins 2018), the prohibition of all militarized drones by all nations and groups would be a great step on the road to demilitarized security.

Conclusions

Instead of the “business ethic of a few”, an ethical approach based on the needs of all people implies leaving behind the widespread concept of “well-having”, which bases welfare on the depredation of other people's resources. It also implies ceasing to prioritize economic profit as the central objective of our civilization, ending armed conflicts and the arms trade, and working towards the negotiated settlement of conflicts. All of this must go together with a recovery of democratic control at a global level, strict regulation of the business and profits of large corporations, and a global action plan aimed at meeting the basic needs of all people. The era of business based on violence against the dispossessed and on the security of those who are already secure should end.

Insofar as artificial intelligence and deep learning algorithms are fuelled by data, autonomous and ML-based weapons inherit some of their ethical challenges from the debate on data governance, especially regarding consent, ownership and privacy. Also, like other AI-based systems, AI-based weapons have limited reliability and are data-sensitive. Having a significant, guaranteed probability of error, ML-based systems with deep neural networks can be used in error-tolerant applications, but are ethically unacceptable when their errors are measured in human victims. Autonomous weapons are a threat to international humanitarian law, to human rights and to human dignity.

Lethal autonomous weapon systems pose a grave threat to humanity and should have no place in the world. Moreover, once developed, these technologies will likely proliferate widely and become available to a wide variety of actors, so the military advantage they offer will be temporary and limited (Kayser, 2018). Completely autonomous weapons (LAWS) that leave humans out of the loop should be banned. Beyond that, the prohibition of all militarized drones by all nations and groups would be a great step on the road to demilitarized security.

A new scenario is also emerging in which robotic weapons can easily be assembled from off-the-shelf components by almost any country or organization. This may have future implications for automated weapon production and trade.

As proposed by scientists concerned about climate change (Brunet 2018), the solution must come from a groundswell of organized grassroots efforts, so that dogged opposition can be overcome and political leaders are compelled to do the right thing according to scientific evidence, within the framework of a new global democracy. This requires signing a global ban on autonomous weapons, as a first step towards banning all militarized drones and robotic weapons by all nations and groups.

References

Alston, Philip (2010): U.N. Doc. No. A/HRC/14/24/Add.6, 28 May 2010. Cited in Markus Wagner (2014), see below, p. 1378, and in David Hookes (2017): https://www.globalresearch.ca/armed-drones-how-remote-controlled-high-tech-weapons-are-used-against-the-poor/5582984

Benjamin, Medea (2013), Drone Warfare: Killing by Remote Control (London, Verso): https://scholar.google.com/scholar_lookup?title=Drone%20Warfare%3A%20Killing%20by%20Remote%20Control&author=M.%20Benjamin&publication_year=2013

Boden M., Bryson J., Caldwell D., Dautenhahn K., Edwards L., Kember S., Newman P., Parry V., Pegman G., Rodden T., Sorrell T., Wallis M., Whitby B. and Winfield A.F. (2017) Principles of robotics: Regulating robots in the real world. Connection Science, 29(2): 124-129 : https://www.tandfonline.com/doi/abs/10.1080/09540091.2016.1271400

Boulanin, Vincent & Verbruggen, Maaike (2017), Mapping the Development of Autonomy in Weapon Systems, Stockholm, SIPRI: https://www.sipri.org/sites/default/files/2017-11/siprireport_mapping_the_development_of_autonomy_in_weapon_systems_1117_1.pdf

Brunet, Pere (2018): “Ethics, peace and militarism: an approach from science”, talk at Tonalestate, August 2018: https://goo.gl/1Q2Foa

Burt, Peter (2018): “Off the Leash: The development of autonomous military drones in the UK”, Drone Wars UK: https://dronewarsuk.files.wordpress.com/2018/11/dw-leash-web.pdf

Domingos, Pedro (2018): Artificial Intelligence Will Serve Humans, Not Enslave Them, Scientific American, September 2018: https://www.scientificamerican.com/article/artificial-intelligence-will-serve-humans-not-enslave-them/

Gariepy, Ryan (2017): https://www.clearpathrobotics.com/2017/08/clearpath-founder-signs-open-letter-un-ban-autonomous-weapons/

Jenkins, Tony, Kent Shifferd, Patrick Hiller and David Swanson (2018), “A Global Security System: An Alternative to War”, World BEYOND War, p. 59: https://worldbeyondwar.org/alternative/

Kayser, Daan and Beck, Alice (2018): Crunch Time, PAX Report, ISBN: 978-94-92487-31-5, November 2018: https://www.paxvoorvrede.nl/media/files/pax-rapport-crunch-time.pdf

Leveringhaus, Alex (2017), “Autonomous weapons mini-series: Distance, weapons technology and humanity in armed conflict”:

McFarland, Matt (2018): Leading AI researchers vow to not develop autonomous weapons: https://money.cnn.com/2018/07/18/technology/ai-autonomous-weapons/index.html

Nguyen, Anh, Jason Yosinski and Jeff Clune (2015): Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images, IEEE CVPR 2015: https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/

Palmerini E., Azzarri F., Battaglia F., Bertolini A., Carnevale A., Carpaneto J., Cavallo F., Carlo A.D., Cempini M., Controzzi M., Koops B.J., Lucivero F., Mukerji N., Nocco L., Pirni A., Shah H., Salvini P., Schellekens M., Warwick K. (2016) Robolaw: Guidelines on Regulating Robotics : http://www.robolaw.eu/RoboLaw_files/documents/robolaw_d6.2_guidelinesregulatingrobotics_20140922.pdf

Potin, Jason (2018): Greedy, Brittle, Opaque, and Shallow: The Downsides to Deep Learning, Wired Ideas 2018: https://www.wired.com/story/greedy-brittle-opaque-and-shallow-the-downsides-to-deep-learning/

Pouyanfar, Samira et al. (2018): A Survey on Deep Learning: Algorithms, Techniques, and Applications, ACM Computing Surveys, Volume 51 Issue 5, November 2018: https://dl.acm.org/citation.cfm?id=3234150

Sample, Ian (2018): Statement of the Joint International Conference on Artificial Intelligence (Stockholm, 18 July 2018): https://www.theguardian.com/science/2018/jul/18/thousands-of-scientists-pledge-not-to-help-build-killer-ai-robots

Sharkey, Noel (2009): Ethics debate: https://robohub.org/robots-robot-ethics-part-1/

Sharkey, Noel (2014): “Towards a principle for the human supervisory control of robot weapons” : https://www.unog.ch/80256EDD006B8954/(httpAssets)/2002471923EBF52AC1257CCC0047C791/$file/Article_Sharkey_PrincipleforHumanSupervisory.pdf

Wagner, Markus (2014): “The Dehumanization of International Humanitarian Law: Legal, Ethical and Political Implications of Autonomous Weapon Systems”, Vanderbilt Journal of Transnational Law, Vol. 47, p. 1380: https://www.researchgate.net/profile/Markus_Wagner11/publication/282747793_The_Dehumanization_of_International_Humanitarian_Law_Legal_Ethical_and_Political_Implications_of_Autonomous_Weapon_Systems/links/561b394b08ae78721f9f907a/The-Dehumanization-of-International-Humanitarian-Law-Legal-Ethical-and-Political-Implications-of-Autonomous-Weapon-Systems.pdf

Winfield, Alan, and Jirotka, Marina (2017): “The Case for an Ethical Black Box”, in Proc. of Towards Autonomous Robotic Systems (TAROS 2017), pp. 262-273: https://link.springer.com/chapter/10.1007/978-3-319-64107-2_21

Woodhams, George and Borrie, John (2018): Armed UAVs in Conflict Escalation and Inter-State Crises, The United Nations Institute for Disarmament Research (UNIDIR) 2018: http://www.unidir.org/files/publications/pdfs/armed-uavs-in-conflict-escalation-and-inter-state-crises-en-727.pdf

Further links:

Two dual-use examples: armed commercial drones: https://nationalinterest.org/blog/buzz/reality-armed-commercial-drones-33396 and vehicles for military and commercial operations: https://www.unmannedsystemstechnology.com/2018/10/general-dynamics-mission-systems-launches-redesigned-bluefin-9-auv/

Randall Munroe, Patrick:

Akkerman, Mark at The Electronic Intifada 31 October 2018: Following Operation Cast Lead, Israel’s assault on Gaza in late 2008 and early 2009, a Human Rights Watch investigation concluded that dozens of civilians were killed with missiles launched from drones. The Heron was identified as one of the main drones deployed in that offensive. Now, Frontex is enabling Israel’s war industry to adapt technology tested on Palestinians for surveillance purposes: https://electronicintifada.net/content/will-europe-use-israeli-drones-against-refugees/25866

Stop Killer Robots

The OFFensive Swarm-Enabled Tactics program

The Super aEgis-II

Armed commercial drones

“The prospect of machines with the discretion and power to take human life is morally repugnant,” Mr. Guterres said.

Samsung SGR-A1

Also: https://en.wikipedia.org/wiki/SGR-A1

In October 2016, Turkish defence minister Isik confirmed that armed UAVs had killed 72 PKK fighters in the Hakkari region. This was the first official government confirmation that armed UAVs were being used: https://www.dailysabah.com/war-on-terror/2016/11/19/turkeys-uav-control-center-expected-to-boost-success-rate-in-counterterrorism-operations